Introduction

In today’s digital age, where speed and efficiency reign supreme, latency has become a critical factor in determining the success of online experiences. Whether it’s a website, a video platform, or an online game, users expect instantaneous responses and seamless interactions. In this article, we will explore the concept of latency, its significance, and how low latency can greatly enhance the user experience.

The Concept of Latency

Latency refers to the time delay between the initiation of a request and the corresponding response. It is often measured in milliseconds (ms) and can be influenced by various factors in the network infrastructure and client-server communication.

The Importance of Low Latency

Low latency is crucial for delivering a fast and responsive user experience. It ensures that users can access content quickly, navigate through websites effortlessly, and engage with interactive elements without any noticeable delays. In a highly competitive digital landscape, where attention spans are short, businesses need to prioritize low latency to retain users and gain a competitive edge.

How Low Latency Enhances User Experience

Achieving low latency directly impacts user experience in several ways. It minimizes frustrating loading times, reduces the likelihood of users abandoning websites or applications, improves SEO rankings, and enhances customer satisfaction. By prioritizing low latency, businesses can create a seamless and enjoyable user experience that keeps visitors coming back for more.

Understanding Latency

To grasp the importance of low latency, it’s essential to have a solid understanding of what latency is and the factors that contribute to it.

Definition and Explanation of Latency

Latency can be defined as the time it takes for a data packet to travel from its source to its destination. It encompasses various stages, including data transmission, processing, and response. Latency can occur in different parts of the communication chain, from the network infrastructure to the end-user device.

Factors Affecting Latency

- Network Infrastructure

The quality and efficiency of the network infrastructure play a crucial role in determining latency. Factors such as network congestion, routing protocols, and the distance between the client and server can impact latency. Well-designed and optimized networks with low latency routers, switches, and cables can significantly reduce latency.

- Transmission Mediums

The type of transmission medium used for data transfer can affect latency. Wired connections, such as fiber-optic cables, generally offer lower latency compared to wireless connections like Wi-Fi or cellular networks. The physical characteristics of the transmission medium, such as signal degradation or interference, can introduce latency as well.

- Server Response Time

The responsiveness of the server to incoming requests is another key factor in latency. A server with fast processing capabilities and optimized software can minimize the time taken to generate and send a response to the client. Factors like server load, resource availability, and efficient coding practices can impact server response time and subsequently affect latency.

- Client Device Performance

The performance and capabilities of the client device also contribute to overall latency. Processing power, memory, and network connectivity of the device can influence how quickly it can send and receive data. Older or underpowered devices may experience higher latency compared to newer and more powerful devices.
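
As a rough illustration of how the factors above add up, the sketch below estimates round-trip latency from path length, server time, and client time. The figure of about 200,000 km/s for light in optical fiber is a common approximation, and the function names and sample numbers are hypothetical rather than measured values.

```python
# Light travels through optical fiber at roughly two thirds of its speed in
# a vacuum, about 200,000 km/s, which works out to 200 km per millisecond.
FIBER_KM_PER_MS = 200.0


def propagation_delay_ms(path_km: float) -> float:
    """One-way propagation delay over a fiber path of the given length."""
    return path_km / FIBER_KM_PER_MS


def estimated_round_trip_ms(path_km: float, server_ms: float, client_ms: float) -> float:
    """Sum the main latency components for one request/response exchange.

    Queuing delay from congestion is ignored, so treat the result as a
    rough lower bound rather than a prediction.
    """
    return 2 * propagation_delay_ms(path_km) + server_ms + client_ms
```

For a hypothetical 6,000 km path with 20 ms of server time and 5 ms on the client, `estimated_round_trip_ms(6000, server_ms=20, client_ms=5)` returns `85.0`, of which 60 ms is pure distance that no amount of server tuning can remove.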

Measuring Latency

To understand and address latency-related issues, it’s essential to measure latency accurately. Here are two commonly used methods for measuring latency:

- Ping and Traceroute

Ping is a network diagnostic tool used to measure the round-trip time between a client and a server. It sends a small data packet (ICMP Echo Request) to the server and measures the time it takes for the server to respond (ICMP Echo Reply). Traceroute, on the other hand, helps identify the path and the network nodes that data packets traverse from the client to the server. It reports the round-trip time to each hop along the way, allowing network administrators to pinpoint potential bottlenecks.

- Tools for Latency Testing

Various specialized tools are available for measuring latency and assessing network performance. These tools simulate real-world scenarios and provide detailed insights into latency, packet loss, and other network metrics. Examples include Iperf, Wireshark, and SolarWinds Network Performance Monitor. These tools help diagnose latency issues and aid in optimizing network infrastructure and server configurations.
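
The measurement idea behind ping can be sketched in a few lines of Python. Sending real ICMP packets usually needs raw sockets and elevated privileges, so this sketch swaps in a different technique: it times a TCP connection handshake, which approximates one network round trip. The function names are hypothetical, and the summary uses a simple nearest-rank 95th percentile.

```python
import math
import socket
import statistics
import time
from typing import Dict, List


def tcp_connect_time_ms(host: str, port: int, timeout: float = 2.0) -> float:
    """Time a TCP handshake as a stand-in for an ICMP ping round trip."""
    start = time.perf_counter()
    with socket.create_connection((host, port), timeout=timeout):
        pass  # the connection is closed as soon as the handshake completes
    return (time.perf_counter() - start) * 1000.0


def summarize(samples_ms: List[float]) -> Dict[str, float]:
    """Reduce a list of latency samples to the figures people usually report."""
    ordered = sorted(samples_ms)
    rank = math.ceil(0.95 * len(ordered))  # nearest-rank 95th percentile
    return {
        "min": ordered[0],
        "median": statistics.median(ordered),
        "p95": ordered[rank - 1],
        "max": ordered[-1],
    }
```

A typical use would be to collect ten or more samples, for example `summarize([tcp_connect_time_ms("example.com", 443) for _ in range(10)])`, and to watch the 95th percentile rather than the average, since occasional slow responses are what users notice.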

Understanding the factors that contribute to latency and employing accurate measurement techniques are essential steps in improving and maintaining low latency. By addressing latency issues, businesses can deliver a seamless user experience that meets the expectations of today’s demanding digital users.

Low Latency: Benefits and Applications

Low latency is not only crucial for enhancing user experience but also has specific benefits and applications in various industries. Let’s explore some of the key areas where low latency plays a vital role.

Gaming and eSports

- Real-Time Responsiveness

Low latency is of utmost importance in gaming and eSports, where split-second decisions can determine success or failure. Gamers require immediate feedback and responsiveness from the game server to maintain smooth gameplay. Low latency ensures minimal delay between player actions and their impact on the game, providing a more immersive and enjoyable gaming experience.

- Competitive Advantage

In competitive gaming, low latency can provide a significant competitive advantage. Players with lower latency connections have faster reaction times, enabling them to outmaneuver opponents and make quicker, more precise moves. Reduced latency can be the difference between winning and losing in highly competitive gaming scenarios.

- Cloud Gaming and Low Latency

Cloud gaming relies heavily on low latency to deliver seamless gameplay experiences. With cloud gaming, the game runs on remote servers, and the video and audio streams are sent to the player’s device in real time. Any latency in the transmission can result in input lag and disrupt the gaming experience. By minimizing latency, cloud gaming platforms can provide high-quality, lag-free gameplay to players regardless of their device’s processing power.

Financial Trading

- High-Frequency Trading (HFT)

In the world of financial trading, high-frequency trading (HFT) relies heavily on low latency. HFT algorithms execute trades within fractions of a second, aiming to capitalize on small price differentials in the market. Low latency enables traders to receive market data, analyze it, and execute trades with minimal delay, maximizing their chances of profiting from market fluctuations.

- Algorithmic Trading

Algorithmic trading strategies, which rely on complex algorithms to execute trades, also benefit from low latency. These algorithms need to process large amounts of data and make trading decisions in real time. With low latency, algorithmic trading systems can execute trades quickly and take advantage of market opportunities before they disappear.

- Impact on Market Dynamics

Low latency in financial trading has a broader impact on market dynamics. It can contribute to increased market liquidity and more efficient price discovery. By reducing the time between trade orders and executions, low latency can also help promote fairness among traders who have comparable access to fast infrastructure.

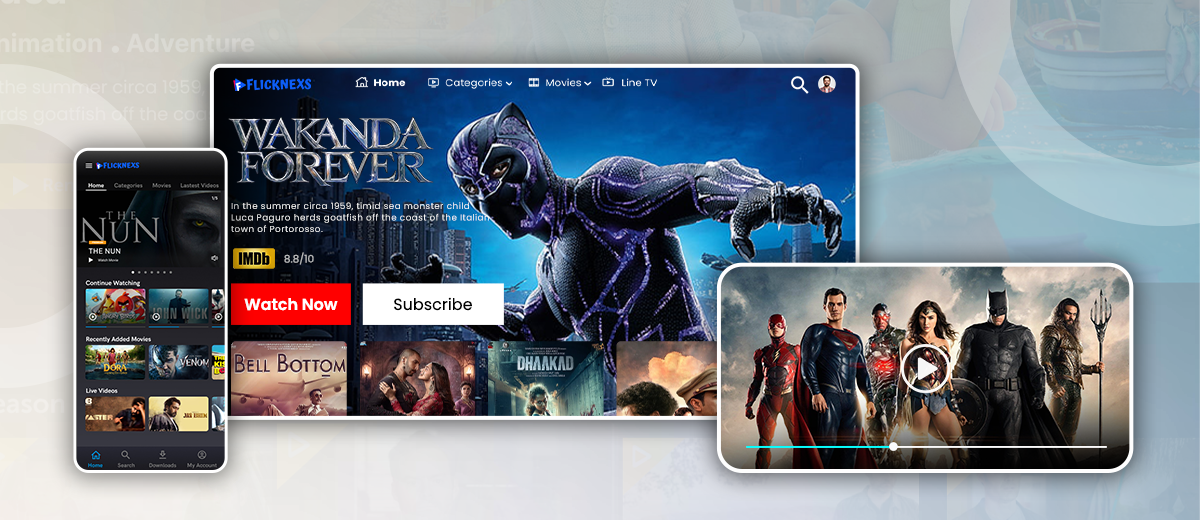

Video Streaming and Telecommunications

- Smooth Video Playback

Low latency is essential for delivering smooth video playback in streaming services. Buffering delays can be frustrating for viewers, leading to a poor user experience. With low latency, VOD platforms can transmit video data efficiently, minimizing buffering time and providing uninterrupted playback.

- Reduced Buffering Time

Low latency also reduces buffering time in video streaming applications. When latency is high, it takes longer for the video data to reach the viewer’s device, resulting in frequent pauses and interruptions. By minimizing latency, streaming platforms can deliver content more efficiently, reducing buffering and ensuring a seamless viewing experience.

- VoIP and Video Conferencing

Low latency is critical in real-time communication applications such as Voice over IP (VoIP) and video conferencing. Delays in voice or video transmission can disrupt conversations and make communication difficult. With low latency, participants can have more natural and fluid conversations, fostering better collaboration and productivity in remote work environments.

Low latency has become a necessity in industries where real-time responsiveness and seamless user experiences are paramount. By embracing and optimizing for low latency, the gaming, financial trading, video streaming, and telecommunications industries can deliver exceptional services and stay ahead in an increasingly competitive digital landscape.

Technologies Enabling Low Latency

To achieve low latency, several technologies have emerged that help optimize network infrastructure and improve data transmission. Let’s explore some of the key technologies enabling low latency.

Content Delivery Networks (CDNs)

- Caching and Edge Servers

Content Delivery Networks (CDNs) are widely used to reduce latency and enhance content delivery. CDNs employ caching techniques where frequently accessed content is stored closer to end-users. By utilizing edge servers located geographically closer to the users, CDNs minimize the distance data has to travel, reducing latency and improving content delivery speed.

- Content Replication

CDNs also replicate content across multiple servers in different locations. This replication ensures that content is readily available from the nearest server, further reducing the time required to fetch and deliver the requested data. Content replication allows for efficient and low-latency distribution of content to users across the globe.
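
The caching behavior described above can be sketched as a small time-to-live cache. This is a minimal illustration, not how any particular CDN is implemented: `EdgeCache`, `fetch_from_origin`, and the 60-second default lifetime are all hypothetical names and values, and the clock is injectable so that expiry can be demonstrated without waiting.

```python
import time
from typing import Callable, Dict, Tuple


class EdgeCache:
    """Tiny time-to-live cache standing in for a CDN edge server."""

    def __init__(self, fetch_from_origin: Callable[[str], bytes],
                 ttl_seconds: float = 60.0,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self._fetch = fetch_from_origin
        self._ttl = ttl_seconds
        self._clock = clock
        self._store: Dict[str, Tuple[float, bytes]] = {}
        self.origin_fetches = 0  # counts the slow trips back to the origin

    def get(self, path: str) -> bytes:
        now = self._clock()
        entry = self._store.get(path)
        if entry is not None and entry[0] > now:
            return entry[1]  # cache hit: served directly from the edge
        body = self._fetch(path)  # cache miss or expired entry: ask the origin
        self.origin_fetches += 1
        self._store[path] = (now + self._ttl, body)
        return body
```

Only the first request for a path, and the first one after the entry expires, pays for the long trip to the origin. Every other request is answered from the nearby copy.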

Edge Computing

- Moving Processing Power Closer to the User

Edge computing aims to bring computational power closer to the network’s edge, reducing latency by processing data locally rather than sending it back and forth to distant data centers. By deploying edge servers or devices at or near the network’s edge, data processing and analysis can occur in proximity to the end-user, minimizing latency and enabling faster response times.

- Benefits for Latency Reduction

Edge computing provides several benefits for reducing latency. By processing data locally, edge computing reduces the time required to transmit data to centralized data centers for processing. This enables real-time or near-real-time applications, such as IoT devices, autonomous vehicles, and smart cities, to operate with minimal latency, resulting in improved performance and user experience.

5G and Next-Generation Wireless Networks

- Ultra-Reliable Low Latency Communication (URLLC)

5G and next-generation wireless networks bring advancements in speed, capacity, and latency reduction. Ultra-Reliable Low Latency Communication (URLLC) is a key feature of 5G designed to provide low latency and high reliability for critical applications. URLLC enables use cases such as autonomous vehicles, remote surgeries, and industrial automation that require near-instantaneous response times.

- Millimeter Wave (mmWave) Technology

Millimeter Wave (mmWave) technology is an integral part of 5G networks that utilizes higher frequency bands for data transmission. This technology allows for significantly faster data rates and lower latency compared to previous wireless generations. By leveraging mmWave technology, 5G networks can deliver high-bandwidth and low-latency connections, supporting applications that require real-time interactivity and data-intensive tasks.

These technologies, including CDNs, edge computing, and 5G networks, play crucial roles in reducing latency and enabling fast and responsive digital experiences. By leveraging these advancements, businesses can deliver high-performance services and applications, meeting the growing demand for low-latency interactions in today’s digital landscape.

Optimizing Websites and Applications for Low Latency

To achieve low latency and deliver fast user experiences, optimizing websites and applications is essential. Here are key strategies for optimizing websites and applications for low latency:

Minimizing Round-Trip Time (RTT)

- Efficient Server-Side Processing

Efficient server-side processing is crucial for reducing latency. Optimizing server code and database queries can significantly improve response times. Employing techniques such as code profiling, caching frequently accessed data, and utilizing efficient algorithms can minimize the time taken for server-side processing, resulting in reduced RTT.

- Code Optimization and Caching

Code optimization and caching on the client side can enhance performance and reduce latency. Minifying and compressing JavaScript and CSS files, leveraging browser caching, and utilizing content delivery networks (CDNs) for static assets enable faster loading times. Caching mechanisms store data locally, reducing the need for additional requests and minimizing RTT.
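
Caching frequently accessed data on the server can be as simple as memoizing a lookup function. The sketch below uses `functools.lru_cache` from Python’s standard library. `product_name` is a hypothetical function, and its body stands in for a slow database query.

```python
from functools import lru_cache


@lru_cache(maxsize=1024)
def product_name(product_id: int) -> str:
    """Look up a product name, keeping recent results in process memory.

    The return statement is a placeholder for a slow database round trip.
    """
    return f"product-{product_id}"
```

After two calls with the same argument, `product_name.cache_info()` reports one miss and one hit, meaning the second call never reached the simulated database. An in-process cache like this suits data that changes rarely. Values that must stay fresh need an expiry or invalidation strategy.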

Reducing Bandwidth Requirements

- Compression Techniques

Reducing the size of data transmitted over the network can significantly improve latency. Utilizing compression techniques such as GZIP or Brotli for text-based resources like HTML, CSS, and JavaScript can substantially reduce the amount of data transferred. Compressed data requires less time to transmit, resulting in faster loading times and lower latency.

- Image and Video Optimization

Optimizing images and videos can greatly impact bandwidth usage and latency. Implementing image compression algorithms, using responsive images to serve appropriate sizes based on device capabilities, and leveraging modern image formats like WebP or AVIF can reduce file sizes without compromising visual quality. For videos, utilizing adaptive streaming technologies like MPEG-DASH or HLS helps deliver optimal quality at lower bandwidth requirements.
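
To make the effect of compression concrete, the sketch below compresses a repetitive HTML fragment with Python’s standard `gzip` module and estimates how long a payload takes to cross a link. Brotli is left out because it is not in the standard library. The helper names and the sample markup are hypothetical, and real pages will compress less dramatically than this artificial example.

```python
import gzip


def gzip_ratio(payload: bytes) -> float:
    """Compressed size divided by original size (lower is better)."""
    return len(gzip.compress(payload)) / len(payload)


def transfer_time_ms(size_bytes: int, bandwidth_bps: float) -> float:
    """Time to push a payload through a link, ignoring propagation delay."""
    return size_bytes * 8 * 1000 / bandwidth_bps


# Repetitive markup, typical of HTML lists and tables, compresses very well.
SAMPLE_HTML = b"<li class='item'>Low latency matters</li>\n" * 200
```

As a worked figure, `transfer_time_ms(1_000_000, 10_000_000)` returns `800.0`: a 1 MB response needs 800 ms on a 10 Mbit/s connection before any other delay is counted, so halving the payload saves about 400 ms.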

Prioritizing Critical Content

- Resource Preloading

Preloading critical resources can improve perceived performance and reduce latency. By anticipating the user’s next actions and preloading necessary assets such as CSS, JavaScript, and images in advance, a site ensures they are readily available when needed. This reduces the need for subsequent requests and minimizes the delay in rendering crucial content.

- Progressive Rendering

Progressive rendering techniques allow content to be displayed gradually as it becomes available, rather than waiting for the entire page to load. By prioritizing the rendering of essential content, users can start consuming and interacting with the page before all resources have finished loading. This approach enhances perceived performance and provides a more responsive user experience.
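
One common way to request preloading is an HTTP `Link` response header with `rel=preload`. The sketch below builds such a header value on the server. The function name and the asset paths are hypothetical, and whether a given resource is worth preloading depends on the page.

```python
from typing import Iterable, Tuple


def preload_link_header(resources: Iterable[Tuple[str, str]]) -> str:
    """Build the value of an HTTP Link header that asks the browser to preload.

    Each resource is a (url, destination) pair, where destination is the value
    of the `as` attribute, such as "style", "script", or "image".
    """
    return ", ".join(f"<{url}>; rel=preload; as={kind}" for url, kind in resources)
```

For example, `preload_link_header([("/css/main.css", "style"), ("/js/app.js", "script")])` produces `</css/main.css>; rel=preload; as=style, </js/app.js>; rel=preload; as=script`. Preloading works best when limited to a handful of critical assets, because every preloaded file competes for the same bandwidth.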

Low Latency Challenges and Solutions

While achieving low latency is desirable, several challenges can hinder its realization. Let’s explore some common challenges and their corresponding solutions:

Network Congestion

- Load Balancing and Traffic Shaping

Network congestion can significantly impact latency. Implementing load balancing techniques distributes network traffic evenly across multiple servers or paths, reducing congestion and improving response times. Traffic shaping allows prioritizing critical traffic, ensuring that latency-sensitive applications receive the necessary bandwidth for optimal performance.

- QoS (Quality of Service) Management

Quality of Service (QoS) management ensures that critical network traffic receives preferential treatment, minimizing latency. By prioritizing specific traffic types, such as voice or video data, QoS mechanisms can allocate sufficient bandwidth and reduce delays caused by congestion. QoS management techniques, such as traffic classification, queuing algorithms, and bandwidth reservation, help maintain low latency for essential applications.
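
The classification and queuing ideas above can be illustrated with a strict-priority scheduler. This is a simplified sketch: the three traffic classes and their ranking are assumptions made for the example, and real QoS systems typically add safeguards so that low-priority traffic is not starved.

```python
import heapq
import itertools
from typing import List, Tuple

# Hypothetical traffic classes; a lower number is sent first.
PRIORITY = {"voice": 0, "video": 1, "bulk": 2}


class PriorityScheduler:
    """Strict-priority queue: latency-sensitive packets always leave first."""

    def __init__(self) -> None:
        self._heap: List[Tuple[int, int, str]] = []
        self._sequence = itertools.count()  # keeps arrival order within a class

    def enqueue(self, traffic_class: str, packet: str) -> None:
        entry = (PRIORITY[traffic_class], next(self._sequence), packet)
        heapq.heappush(self._heap, entry)

    def dequeue(self) -> str:
        return heapq.heappop(self._heap)[2]

    def __len__(self) -> int:
        return len(self._heap)
```

If a bulk packet arrives before two voice packets and a video packet, the scheduler still sends both voice packets first, then the video packet, and the bulk packet last.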

Geographic Distance and Signal Propagation

- Edge Nodes and Data Centers Placement

Reducing the physical distance between users and network resources is essential for minimizing latency. Placing edge nodes and data centers strategically closer to end-users can help mitigate latency caused by geographic distance. By bringing content and computational resources closer to the network edge, data transmission times are reduced, resulting in lower latency.

- Network Optimization Techniques

Network optimization techniques, such as routing optimization and protocol optimizations, help mitigate latency issues caused by signal propagation delays. Optimizing routing protocols minimizes the number of network hops required for data to reach its destination, reducing overall latency. Implementing efficient network protocols, such as TCP/IP optimizations or the use of advanced congestion control algorithms, improves data transmission efficiency and reduces latency.
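
Choosing the closest edge node can be approximated with great-circle distance, as in the sketch below. The node names and coordinates are hypothetical examples, and straight-line distance is only a proxy: real network paths are longer, so production systems usually rely on measured round-trip times instead.

```python
import math
from typing import Dict, Tuple

EARTH_RADIUS_KM = 6371.0
Coordinate = Tuple[float, float]  # (latitude, longitude) in degrees

# Hypothetical edge locations used only for this example.
NODES: Dict[str, Coordinate] = {
    "frankfurt": (50.1109, 8.6821),
    "new-york": (40.7128, -74.0060),
    "singapore": (1.3521, 103.8198),
}


def great_circle_km(a: Coordinate, b: Coordinate) -> float:
    """Haversine distance between two points on the Earth's surface."""
    lat1, lon1, lat2, lon2 = map(math.radians, (*a, *b))
    h = (math.sin((lat2 - lat1) / 2) ** 2
         + math.cos(lat1) * math.cos(lat2) * math.sin((lon2 - lon1) / 2) ** 2)
    return 2 * EARTH_RADIUS_KM * math.asin(math.sqrt(h))


def nearest_node(user: Coordinate, nodes: Dict[str, Coordinate]) -> str:
    """Return the name of the node with the shortest great-circle distance."""
    return min(nodes, key=lambda name: great_circle_km(user, nodes[name]))
```

A user in Paris, at roughly (48.8566, 2.3522), would be routed to the Frankfurt node, which is a few hundred kilometers away rather than several thousand.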

Security and Low Latency

- DDoS Mitigation Strategies

Ensuring security while maintaining low latency can be challenging, particularly when facing Distributed Denial of Service (DDoS) attacks. Implementing robust DDoS mitigation strategies, such as traffic filtering, rate limiting, and behavioral analysis, helps protect network infrastructure from attacks without compromising latency. Advanced DDoS mitigation solutions can identify and block malicious traffic while allowing legitimate traffic to pass through with minimal latency impact.

- Encryption and Secure Protocols

Encrypting network traffic is crucial for maintaining security, but it can introduce additional latency. However, utilizing modern encryption algorithms and optimized implementations can minimize the latency overhead. Employing hardware-accelerated encryption, implementing forward secrecy, and using efficient encryption protocols like TLS 1.3 can help strike a balance between security and low latency.
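
Rate limiting, one of the mitigation strategies mentioned above, is often built on a token bucket. The sketch below is a minimal single-client version under stated assumptions: the class name, the parameters, and the injectable clock are hypothetical, and a real deployment would keep one bucket per client or address and combine it with other defenses.

```python
import time
from typing import Callable


class TokenBucket:
    """Allow short bursts up to `capacity` while capping the sustained rate."""

    def __init__(self, rate_per_sec: float, capacity: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self._rate = rate_per_sec
        self._capacity = capacity
        self._clock = clock
        self._tokens = capacity  # start full so the first burst is allowed
        self._last = clock()

    def allow(self, cost: float = 1.0) -> bool:
        now = self._clock()
        # Refill in proportion to elapsed time, never beyond the capacity.
        refill = (now - self._last) * self._rate
        self._tokens = min(self._capacity, self._tokens + refill)
        self._last = now
        if self._tokens >= cost:
            self._tokens -= cost
            return True
        return False  # over the limit: drop or delay the request
```

The check is a few arithmetic operations per request, so legitimate traffic passes with negligible added delay while floods beyond the configured rate are rejected.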

Conclusion

In today’s fast-paced digital landscape, low latency plays a crucial role in delivering exceptional user experiences. By minimizing delays in data transmission, businesses can provide real-time responsiveness, improve application performance, and enhance user satisfaction.

In this article, we explored the concept of latency and its significance. We discussed the factors affecting latency, including network infrastructure, transmission mediums, server response time, and client device performance. We also covered measuring latency with ping and traceroute, as well as with dedicated testing tools.
